Bill Trippe and I were speaking with someone at a mid-sized education publisher the other day, and we heard a well-informed and articulate series of complaints about the Kindle format. The frustration behind these comments was palpable: Kindle is where so much of the early and encouraging growth of the ebook market has happened, but the E-ink display and .AZW format are really not good for any content beyond straightforward text constrained within a narrative paragraph structure. While such constraint works fine for many titles, any publisher producing educational, professional, STM, or other even moderately complex content has to compromise far too much.

Book publishers still not committing to the ebook market certainly like the news of its potential (the latest word, perhaps, being Forrester’s James McQuivey and his projection of the ebook market hitting $3 billion in sales sometime soon), but these same book publishers, who after all are the ones having to do the work, find themselves wondering if they can get there from here. Hannah Johnson, at PublishingPerspectives, posted a blog entry titled “Forrester’s James McQuivey Says Digital Publishing is About Economics, Not Format.” It covers a post by James McQuivey of Forrester Research about the projected growth of ebook sales and his emphasis on economics, not formats, when assessing ebooks’ future.

McQuivey’s point is right, of course, although it isn’t a startling conclusion; it is more on par with pointing out that, for print books, it matters very little whether the title comes out in hardcover or paperback, or in one trim size rather than another. Still, amid today’s ebook hysteria, it remains valuable to point out the sensible perspective.

In book publishing, the main consideration is producing a book that is of strong interest to readers, while also making sure that these readers can get their hands on the title in ways that produce sufficient monetary gain for the publisher. The only reason ebook formats are such a concern at the moment is that the question is a new one: book publishers are still struggling to figure out how to implement these formats, even while the marketplace for any and all of them remains nascent.

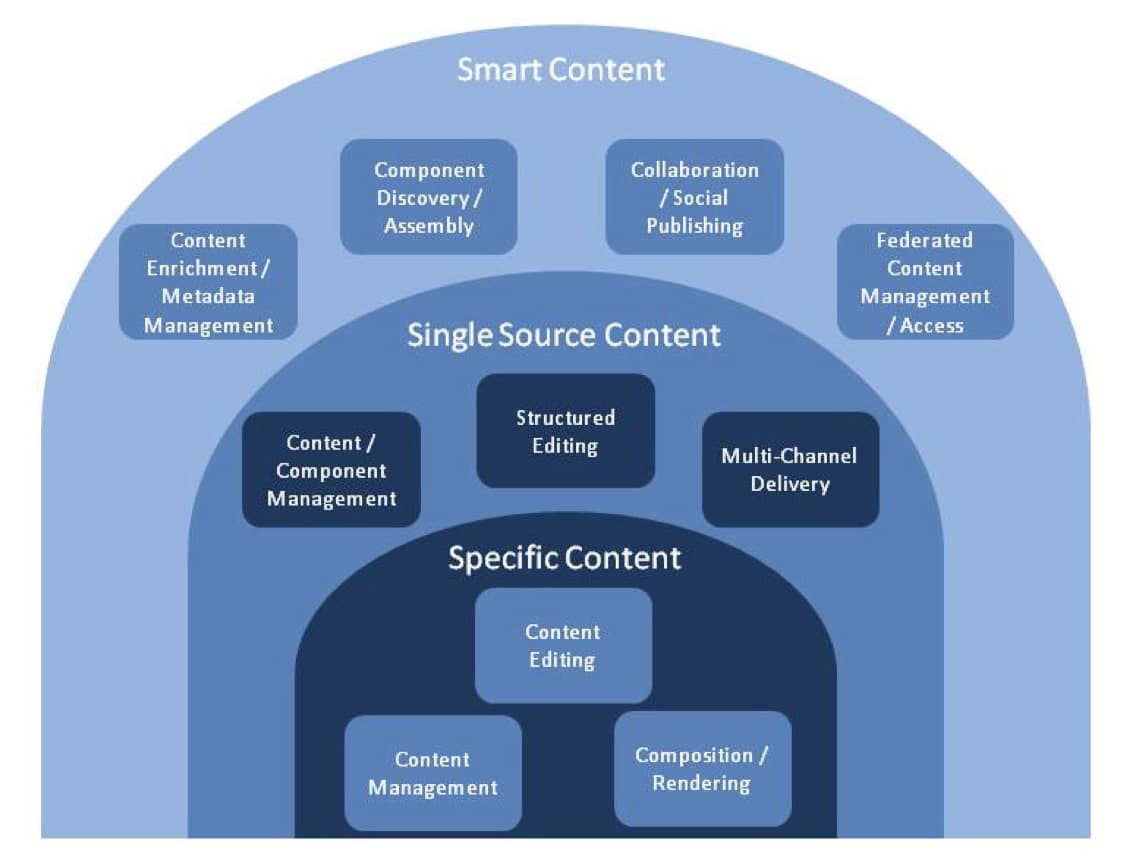

The Gilbane Group has been in the business of helping companies with all kinds of content, including publishers of many stripes, manage that content more effectively and get it to those who need it, at the right time, in the right form. Our recent 277-page study, A Blueprint for Book Publishing Transformation: Seven Essential Systems to Re-Invent Publishing (which is free to download, by the way), discusses the issue of ebook formats and makes the point that book publishers need to move toward a digital workflow, preferably an XML-based one, as early in the editorial and production process as possible, so that all the formats the publisher may want to produce now and in the years ahead can be realized much more efficiently and much less expensively. One section in our industry forecast chapter is titled “Ebook Reader Devices in Flux, But so What?”

But good strategic planning in book publishing doesn’t necessarily resolve each and every particular requirement for market success, and given the confused state of ebook formats and their varying production demands, we’re developing our next study to drill down into this very issue. Working title: Ebooks, Apps, and Formats: The Practical Issues.

Stay tuned. Drop a line.