The analyst keynote panel at the sixth annual Gilbane Boston Conference, produced by The Gilbane Group and Lighthouse Seminars and taking place December 1-3, 2009, in Boston, MA, hosts leading industry analysts who will debate What’s Real, What’s Hype, and What’s Coming in content management and collaboration. Industry analysts from different firms speak at all Gilbane events so that conference attendees hear differing opinions from a wide variety of expert sources. A second, third, fourth, or fifth opinion will help ensure that IT and business managers don’t make ill-informed decisions about critical content and information technologies or strategies.

Some of the topics to be debated are: how the upcoming release of SharePoint 2010 and Office 2010 will affect the web and enterprise content management, search, and collaboration markets; what organizations are finding when they deploy enterprise social software; what companies should be doing about managing user-generated content; whether it is time to seriously invest in mobile content applications; and how companies are engaging customers with multilingual web sites.

“Industry Analyst Debate: What’s Real, What’s Hype, and What’s Coming” will be a lively, interactive debate guaranteed to be both informative and fun. Participants include moderator Frank Gilbane, CEO, Gilbane Group, and panelists: Melissa Webster, Vice President, Content & Digital Media Technologies, IDC; Stephen Powers, Senior Analyst, Forrester; Dale Waldt, Senior Analyst, Gilbane Group; Kathleen Reidy, Senior Analyst, 451 Group; and Guy Creese, VP & Research Director, Collaboration and Content Strategies, Burton Group. Conference attendees are encouraged to come with questions, and can also suggest questions in advance via our social media channels or email. See http://gilbaneboston.com/conference_program.html#K2 and http://twitter.com/gilbaneboston

Month: October 2009

Microsoft has a lot to lose if they are unable to coax customers to continue to use and invest in Office. Google is trying to woo people away by providing a complete online experience with Google Docs, Gmail, and Google Wave. Microsoft is taking a different tack. They are easing Office users into a Web 2.0-like experience by creating a hybrid environment, in which people can continue to use the rich Office tools they know and love, and mix this with a browser experience. I use the term Web 2.0 here to mean that users can contribute important content to the site.

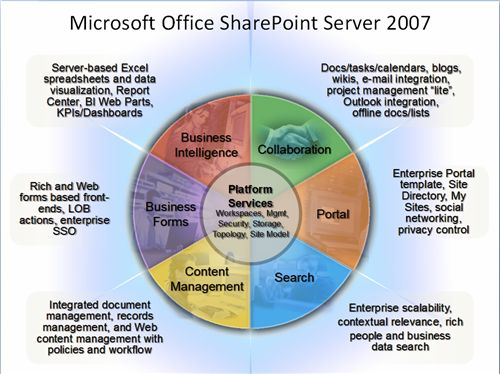

SharePoint leverages Office to allow users to create, modify, and display “deep” [1] content, while leveraging the browser to navigate, view, discover, and modify “shallow” [1] content. SharePoint is not limited to this narrow hybrid feature set, but in this post I examine and illustrate how Microsoft is focusing its attention on Office users. The feature set that I concentrate on in this post is referred to as the “Collaboration” portion of SharePoint. It is depicted in Microsoft’s canonical six-segment wheel shown in Figure 1. This is the most mature part of SharePoint and works quite well, as long as the client machine requirements outlined below are met.

Figure 1: The canonical SharePoint Marketing Tool – Today’s post focuses on the Collaboration Segment

Preliminaries: Client Machine Requirements

Out of the box, SharePoint works well if all client machines adhere to the following constraints:

- The client machines must be running Windows (XP, Vista, or Windows 7).

  NOTE: The experience for users who are using Mac OS, Linux, iPhones, and Google phones is poor. [2]

- The only truly supported browser is Internet Explorer (7 and 8). [2]

  NOTE: Firefox, Safari, and Opera can be used, but the experience is poor.

- The client machines need to have Office installed, and, as implied by the first bullet above, the Mac version of Office doesn’t work well with SharePoint 2007.

- All the clients should have the same version of Office. Office 2007 is optimal, but Office 2003 can be used. Mixing Office versions can cause issues.

- A number of tweaks need to be made to the security settings of the browser so that the client machine works seamlessly with SharePoint.

I refer to this as a “Microsoft Friendly Client Environment.”

Some consequences of these constraints are:

- SharePoint is not a good choice for a publicly facing Web 2.0 environment (more on this below).

- SharePoint can be okay for a publicly facing brochureware site, but it wouldn’t be my first choice.

- SharePoint works well as an extranet environment, if all the users are in a Microsoft Friendly Client Environment, and significant attention has been paid to securing the site.

A key take-away from these constraints is that a polished end-user experience relies on:

- A carefully managed computing environment for end users (a Microsoft Friendly Client Environment), and/or

- A great deal of customization to SharePoint.

This is not to say that one cannot deploy a publicly facing site with SharePoint. In fact, Microsoft has made a point of showcasing numerous publicly facing SharePoint sites [3].

What you should know about these SharePoint sites is:

- A nice-looking, publicly facing SharePoint site that works well on multiple operating systems and browsers has been carefully tuned with custom CSS files and master pages. This type of work tends to be expensive, because it is difficult to find people who have a good eye for aesthetics and who also understand CSS, SharePoint master pages, and publishing.

- A publicly facing SharePoint site that provides rich Web 2.0 functionality requires a good deal of custom .NET code and probably some third-party vendor software. This can add up to considerably more than originally budgeted.

An important consideration before investing in a custom UI (CSS and master pages), third-party tools, and custom .NET code is that they will most likely be painful to migrate when the underlying SharePoint platform is upgraded to the next version, SharePoint 2010. [4]

From these introductory paragraphs, you might get the wrong idea that I am opposed to using SharePoint. I actually think SharePoint can be a very useful tool, provided that one applies it to the appropriate business problems. In this post I will describe how Microsoft is transitioning people from a pure Office environment to an integrated Office and browser (SharePoint) environment.

So, What is SharePoint Good at?

When SharePoint is coupled closely with a Microsoft Friendly Client Environment, non-technical users can increase their productivity significantly by using SharePoint to add Web 2.0 capabilities to their Office documents.

Two big problems exist with the deep content stored inside Office documents (Word, Excel, PowerPoint, and Access):

- Hidden Content: Office documents can pack a great deal of complex content. That content can be reached only by opening each file individually or by executing a well-formulated search. This is an issue! The former is labor-intensive, and the latter is not guaranteed to return consistent results.

- Many Versions of the Truth: Many versions of the same file float around, and it is difficult, if not impossible, to know which file represents the “truth.”

SharePoint 2007 can make a significant impact on these issues.

Document Taxonomies

Go into any organization with more than five people, and chances are there will be a shared drive with thousands of files in Microsoft and non-Microsoft formats (Word, Excel, Acrobat, PowerPoint, Illustrator, JPEG, InfoPath, etc.) that hold important content. Yet that content is difficult to discover, and difficult to extract in aggregate. For example, a folder of sales documents may contain a number of key pieces of information that would be nice to have in a report:

- Customer

- Date of sale

- Items sold

- Total Sale in $’s

Categorizing documents by these attributes is often referred to as defining a taxonomy. SharePoint provides a spectrum of ways to associate taxonomies with documents. I say “spectrum” because non-Microsoft file formats can have this information only loosely coupled, while some Office 2007 file formats can have it bound tightly to the contents of the document. This is a deep subject, and it is not my goal to provide a tutorial, but I will shine some light on the topic.

SharePoint uses the term “Document Library” as a metaphor for a folder on a shared drive. Microsoft intended that a business user should be able to create a document library and add a taxonomy for its important content. In the vernacular of SharePoint, the taxonomy is stored in “columns,” which allow users to extract important information from the files that reside in the library: for example, “Customer,” “Date of Sale,” or “Total Sale in $’s” in the previous example. The document library can then be sorted or filtered on the values in these columns. One can even compute aggregates from the column values; for example, total sales can be summed for a specific date or customer.
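To make the column idea concrete, here is a minimal Python sketch of a document library as a list of files, each carrying values for the library’s columns, with the sort, filter, and aggregate operations described above. It is a toy model under stated assumptions, not SharePoint’s object model or API: the `DocumentLibrary` class, its methods, and the sample file names and dollar figures are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Document:
    """One file in the (hypothetical) library plus its column values."""
    name: str
    columns: dict = field(default_factory=dict)


class DocumentLibrary:
    """Toy model of a document library whose columns form a simple taxonomy."""

    def __init__(self, column_names):
        self.column_names = list(column_names)
        self.documents = []

    def add(self, name, values):
        # Reject values for columns the library does not define.
        unknown = set(values) - set(self.column_names)
        if unknown:
            raise ValueError(f"unknown columns: {sorted(unknown)}")
        self.documents.append(Document(name, dict(values)))

    def filter(self, column, value):
        # Files whose column equals the given value, in insertion order.
        return [d for d in self.documents if d.columns.get(column) == value]

    def sort(self, column):
        # Files ordered by one column's value (every file must have it).
        return sorted(self.documents, key=lambda d: d.columns[column])

    def total_by(self, group_column, sum_column):
        # Aggregate: sum one numeric column for each value of another column.
        totals = defaultdict(float)
        for d in self.documents:
            totals[d.columns[group_column]] += d.columns[sum_column]
        return dict(totals)


sales = DocumentLibrary(["Customer", "Date of Sale", "Items Sold", "Total Sale"])
sales.add("acme-sept.docx", {"Customer": "Acme", "Date of Sale": date(2009, 9, 14),
                             "Items Sold": 3, "Total Sale": 1200.0})
sales.add("globex-oct.pptx", {"Customer": "Globex", "Date of Sale": date(2009, 10, 5),
                              "Items Sold": 2, "Total Sale": 800.0})
sales.add("acme-oct.xlsx", {"Customer": "Acme", "Date of Sale": date(2009, 10, 2),
                            "Items Sold": 1, "Total Sale": 450.0})

print([d.name for d in sales.filter("Customer", "Acme")])  # ['acme-sept.docx', 'acme-oct.xlsx']
print([d.name for d in sales.sort("Date of Sale")])  # ['acme-sept.docx', 'acme-oct.xlsx', 'globex-oct.pptx']
print(sales.total_by("Customer", "Total Sale"))  # {'Acme': 1650.0, 'Globex': 800.0}
```

The point of the model is that the report comes from the column values, not from opening each file; how those values get into the columns is exactly where the spectrum discussed next comes in.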

The reason I carefully worded this as a “spectrum” is that the quality of the solution Microsoft offers depends on the document file format and its associated application. The solution is most elegant for Word 2007 and InfoPath 2007, less so for the Excel and PowerPoint 2007 formats, and even less so for the remaining formats, which belong to non-Microsoft products. The degree to which the taxonomy can be bound to actual file contents does not depend on SharePoint; rather, it depends on how well the application has implemented the SharePoint standard around “file properties.”

I believe that Microsoft had intended for the solution to be deployed equally well for all the Office applications, but time ran out for the Office team. I expect to see a much better implementation when Office 2010 arrives. As mentioned above, the implementation is best for Word 2007. It is possible to tag any content inside a Word document or template as one that should “bleed” through to the SharePoint taxonomy. Thus key pieces of content in Word 2007 documents can actually be viewed in aggregate by users without having to open individual Word documents.

It seems clear that Microsoft had the same intention for the other Office products, because the product documentation states that you can do the same for most Office products. However, my own research into this shows that only Word 2007 works. A surprising work-around for Excel is that if one sticks to the Excel 2003 file format, then one can also get the same functionality to work!

The next level of the spectrum operates as designed for all Office 2007 applications. In this case, all columns that are added as part of the SharePoint taxonomy can penetrate through to a panel of the Office application. Thus users can be forced to fill in information about the document before saving it. Figure 2 illustrates this. Microsoft refers to this as the “Document Information Panel” (DIP). Figure 3 shows how a mixture of document formats (Word, Excel, and PowerPoint) all have their columns populated with information. The disadvantage of this type of content binding is that a user must explicitly fill out the information in the DIP, rather than having it populated automatically from the content inside the document.

Figure 2: Illustrates the “Document Information Panel” that is visible in PowerPoint. This panel shows up because three columns have been set up in the Document Library: Title, testText, and testNum. testText and testNum have been populated by the user and can be seen in the Document Library (see Figure 3).

Figure 3: Illustrates the SharePoint Document Library showing the data from the Document Information Panel (DIP) “bleeding through.” For example, the PowerPoint document has testText = fifty eight, testNum = 58.
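The DIP trade-off can be sketched in a few lines of Python: the column values are whatever the user types into the panel, and a save can be refused until the required columns are filled in. The function and exception names are hypothetical, the column names follow Figure 2, and treating all three as required is an assumption for illustration. Note that nothing here reads the document’s own content, which is the limitation described above.

```python
class MissingMetadataError(Exception):
    """Raised when a required column has no value at save time."""


def save_with_panel_values(file_name, panel_values, required_columns):
    """Hypothetical save step: accept the file only if every required column is filled.

    The values come from what the user typed into the panel; nothing is
    extracted from the document's contents.
    """
    missing = [c for c in required_columns if panel_values.get(c) in (None, "")]
    if missing:
        raise MissingMetadataError(f"{file_name}: fill in {', '.join(missing)} before saving")
    return {"file": file_name, **panel_values}


required = ["Title", "testText", "testNum"]
record = save_with_panel_values(
    "deck.pptx", {"Title": "Quarterly review", "testText": "fifty eight", "testNum": 58}, required
)
print(record["testNum"])  # 58

try:
    save_with_panel_values("draft.pptx", {"Title": "Draft", "testText": ""}, required)
except MissingMetadataError as err:
    print(err)  # draft.pptx: fill in testText, testNum before saving
```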

Finally, the last level on the taxonomy feature spectrum is for non-Microsoft documents. SharePoint allows one to associate column values with any kind of document. For example, a JPEG file can have SharePoint metadata that indicates who owns its copyright. However, this metadata is not embedded in the document itself. Thus, if the file is moved to another document library or downloaded from SharePoint, the metadata is lost.
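The loosely coupled case can be modeled as a library that keeps column values in its own table, keyed by file name, while the file bytes stay untouched. In this minimal Python sketch (the `Library` class, the `move` helper, and the sample values are hypothetical, not SharePoint APIs), moving or downloading a file carries only the bytes, so the copyright-owner value is left behind, mirroring the behavior described above.

```python
from dataclasses import dataclass, field


@dataclass
class Library:
    """Hypothetical library: file bytes and column values are stored separately."""
    files: dict = field(default_factory=dict)     # file name -> raw bytes
    metadata: dict = field(default_factory=dict)  # file name -> column values

    def upload(self, name, content, **columns):
        self.files[name] = content
        self.metadata[name] = dict(columns)

    def download(self, name):
        # Only the bytes leave the library; the metadata table stays behind.
        return self.files[name]


def move(name, source, target):
    """Move a file's bytes to another library; its column values are not copied."""
    target.files[name] = source.files.pop(name)
    source.metadata.pop(name, None)


photos, archive = Library(), Library()
photos.upload("skyline.jpg", b"\xff\xd8...", copyright_owner="Example Photo Co.")
print(photos.metadata["skyline.jpg"])       # {'copyright_owner': 'Example Photo Co.'}
move("skyline.jpg", photos, archive)
print("skyline.jpg" in archive.files)       # True
print(archive.metadata.get("skyline.jpg"))  # None
```

By contrast, the tightly bound Word 2007 case stores the values inside the file itself, so they travel with it.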

A Single Version of the Truth

This is the feature set that SharePoint implements the best. A key issue in organizations is that files are often emailed around and no one knows where the truly current version is and what the history of a file was. SharePoint Document libraries allow organizations to improve this process significantly by making it easy for a user to email a link to a document, rather than email the actual document. (See figure 4.)

Figure 4: Illustrates how easy it is to send someone a link to the document, instead of the document itself.

In addition to supporting good practices around reducing content proliferation, SharePoint also promotes good versioning practices. As Figure 5 illustrates, any document library can easily be set up to handle file versions and file locking. Thus it is easy to ensure that only one person is modifying a file at a time and that there is only one true version of the file.

Figure 5: Illustrates how one can look at the version history of a document in a SharePoint Document Library.
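The check-out/check-in discipline behind this can be sketched as follows. This is a simplified Python model, not SharePoint’s implementation: the class and method names and the sample users are hypothetical, and versions are numbered with plain integers for simplicity.

```python
class CheckedOutError(Exception):
    """Raised when a user tries to change a file someone else has checked out."""


class VersionedFile:
    """Hypothetical model: one editor at a time, and every check-in adds a version."""

    def __init__(self, name, content, author):
        self.name = name
        self.history = [(1, author, content)]  # (version number, author, content)
        self.checked_out_by = None

    def check_out(self, user):
        # The lock: nobody else may check out a file that is already checked out.
        if self.checked_out_by not in (None, user):
            raise CheckedOutError(f"{self.name} is checked out by {self.checked_out_by}")
        self.checked_out_by = user

    def check_in(self, user, new_content):
        if self.checked_out_by != user:
            raise CheckedOutError(f"{user} has not checked out {self.name}")
        self.history.append((len(self.history) + 1, user, new_content))
        self.checked_out_by = None  # release the lock

    @property
    def current(self):
        # The single "true" version is always the latest entry in the history.
        return self.history[-1][2]


contract = VersionedFile("contract.docx", "draft 1", "alice")
contract.check_out("alice")
try:
    contract.check_out("bob")
except CheckedOutError as err:
    print(err)  # contract.docx is checked out by alice
contract.check_in("alice", "draft 2")
print(contract.current)                     # draft 2
print([v for v, _, _ in contract.history])  # [1, 2]
```

Because every change goes through check-in, the history doubles as the audit trail that Figure 5 displays, and emailing a link to `contract.docx` always points at `current` rather than at a stale copy.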

Summary

In this post I focus on the feature set of SharePoint that Microsoft uses to motivate Office users to migrate to SharePoint. These features are often termed the “Collaboration” features in the six-segment MOSS wheel (see Figure 1). The collaboration features of MOSS are the most mature part of SharePoint. Another key take-away is the “Microsoft Friendly Client Environment.” I have worked with numerous clients who were taken by surprise when they realized how tightly the client machines are restricted.

Finally, on a positive note, the features that I have discussed in this post are all available in the free version of SharePoint (WSS); there is no need to buy MOSS. In future posts, I will elaborate on MOSS-only features.

—————————————–

[1] The terms “deep” and “shallow” are my creation, and not a standard. By “deep” content I am referring to complex content such as Word documents (contracts, manuscripts) or Excel documents (complex mathematical models, actuarial models, etc.).

[2] Microsoft has addressed this by stating that SharePoint 2010 would support some of these environments. I am somewhat skeptical.

[3] Public Facing Internet Sites on MOSS, http://blogs.microsoft.nl/blogs/bartwe/archive/2007/12/12/public-facing-internet-sites-on-moss.aspx

[4] Microsoft has frequently stated that as long as one adheres to best practices, the migration to SharePoint 2010 will not be bad. However, Microsoft does not have a good track record in this regard, either for the SharePoint 2003 to 2007 upgrade or for many other products.

Jive Software’s announcement last week of the Jive SharePoint Connector was met with a “so what” reaction by many people. They criticized Jive for not waiting to make the announcement until the SharePoint Connector is actually available later this quarter (even though pre-announcing products is now a fairly common practice in the industry). Many also viewed this as a late effort by Jive to match the existing SharePoint content connectivity found in competitors’ offerings, most notably those of NewsGator, Telligent, Tomoye, Atlassian, Socialtext, and Connectbeam.

Those critics missed the historical context of Jive’s announcement and, therefore, failed to understand its ramifications. Jive’s SharePoint integration announcement is very important because it:

- underscores the dominance of SharePoint in the marketplace, in terms of deployments as a central content store, forcing all competitors to acknowledge that fact and play nice (provide integration)

- reinforces the commonly held opinion that SharePoint’s current social and collaboration tools are too difficult and expensive to deploy, causing organizations to layer third-party solutions on top of existing SharePoint deployments

- is the first of several planned connections from Jive Social Business Software (SBS) to third-party content management systems, meaning that SBS users will eventually be able to find and interact with enterprise content without regard for where it is stored

- signals Jive’s desire to become the de facto user interface for all knowledge workers in organizations using SBS

The last point is the most important. Jive’s ambition is bigger than just out-selling other social software vendors. The company intends to compete with other enterprise software vendors, particularly with platform players (e.g., IBM, Microsoft, Oracle, and SAP), to be the primary productivity system of choice for large organizations. Jive wants to position SBS as the knowledge workers’ desktop, and its ability to integrate bi-directionally with third-party enterprise applications will be key to attaining that goal.

Jive’s corporate strategy was revealed in March, when they decreed a new category of enterprise software — Social Business Software. Last week’s announcement of an ECM connector strategy reaffirms that Jive will not be satisfied by merely increasing its Social Media or Enterprise 2.0 software market share. Instead, Jive will seek to dominate its own category that bleeds customers from other enterprise software market spaces.

Fifth in a series of interviews with sponsors of Gilbane’s 2009 study on Multilingual Product Content: Transforming Traditional Practices into Global Content Value Chains.

We spoke with David Smith, president of LinguaLinx Language Solutions, a full-service translation agency providing multilingual communication solutions in over 150 languages. David talked with us about the evolving role of the language service provider across the global content value chain (GCVC), their rationale for co-sponsoring the research, and what findings they consider most relevant from the research.

Gilbane: How does your company support the value chain for global product support?

Smith: As a translation agency, we’ve realized that our involvement with global content should be much earlier in the supply chain. In addition to localization, we support clients in reducing costs and increasing efficiencies by providing consulting services that revolve around the content authoring process – from reuse strategies and structured authoring best practices to maximizing the inherent capabilities of content management and workflow systems. Rather than just adapting content into other languages, we assist with its creation so that it is concise, consistent and localization-friendly.

Gilbane: Why did you choose to sponsor the Gilbane research?

Smith: Of the many organizations and associations we belong to, we find that the research and topics of Gilbane studies and conferences alike most closely align with our interest and efforts to diversify our services and become a turn-key outsourced documentation consultancy as opposed to a traditional translation agency.

Gilbane: What is the most interesting/compelling/relevant result reported in the study?

Smith: The findings present two major points that we feel are relevant. First, there is definitely wide-ranging recognition of the benefits derived from the creation of standardized content in a content management system integrated with a localization workflow solution.

Secondly, there are many, many different ways of approaching the creation, management, and publishing of global content. There’s often a significant gap between the adoption of global content solutions – such as authoring software, translation management software, workflow linking different technologies – and the successful implementation of these solutions among those responsible for day-to-day content creation and delivery. A major manufacturer of GPS technology is actually authoring directly in InDesign to a great extent even though it utilizes an industry-leading translation workflow tool – which provides an example of the lengths to which internal processes must be changed to realize truly efficient global content processes.

For more insights into the link between authoring and translation and localization, see the section “Achieving Quality at the Source” that begins on page 28 of the report. You can also learn how LinguaLinx helped New York City Department of Education communicate with 1.8 million families across 1,500 schools in which 43% of students speak a language other than English at home. Download the study for free.

Syncro Soft released <oXygen/> XML Editor and Author version 11. Version 11 of <oXygen/> XML Editor comes with new features covering both XML development and XML authoring, such as XProc support, integrated documentation for XSLT stylesheets, a new XQuery debugger (for the Oracle Berkeley DB XML database), MathML rendering and editing support, a smarter Author mode for an improved visual editing experience, and DITA 1.2 features. Support for very large documents was improved to handle documents in the 200MB range in the editor and 10GB in the large files viewer, SVN support was upgraded with new features, and a number of processors and frameworks were updated to their latest versions. <oXygen/> 11 also contains an experimental integration with the EMC Documentum content management system. <oXygen/> XML Editor can be purchased for USD 449 for the Enterprise license, USD 349 for the Professional license, and USD 64 for Academic/Non-Commercial use (the latter includes the support and maintenance pack). <oXygen/> XML Author can be purchased for USD 269 for the Enterprise license and USD 199 for the Professional license. <oXygen/> XML Editor and Author version 11 can be freely evaluated for 30 days. You can request a trial license key from http://www.oxygenxml.com

So Microsoft was asleep at the wheel and didn’t use good procedures to back up and restore Sidekick data [1][2]. It was just a matter of time until we saw a breakdown in cloud computing. Is this the end of cloud computing? Not at all! I think it is just the beginning. Are we going to see other failures? Absolutely! These failures are good, because they help sensitize potential consumers of cloud computing to what can go wrong and to the contractual obligations service providers must adhere to.

There is so much impetus for centralized computing that I think the positives will outweigh all the risk and downside. On the positive side, security, operational excellence, and lower costs will eventually become mainstream in centralized services. Consumers and corporations will become tired of the inconvenience and high cost of maintaining their own computing facilities in the last mile.

Willie Sutton, a notorious bank robber, is often misquoted as saying that he robbed banks "because that’s where the money is."[3] Yet all of us still keep our money with banks of one sort or another. Even though online fraud statistics are sharply increasing [4][5], the trend to use online and mobile banking as well as credit/debit transactions is on a steep ascent. Many banking experts suggest that this trend is due to convenience.

Whether a corporation is maintaining its own application servers and desktops or consumers are caring for and feeding their Macs and PCs, the cost of doing this, measured in time and money, is steadily growing. The expertise that is required keeps increasing. Furthermore, the likelihood of a security breach when individuals care for their own security is high.

Critics of cloud computing say that the likelihood of breakdowns in highly concentrated environments such as cloud computing servers is high. The three main factors they point to are:

- Security Breaches

- Lack of Redundancy

- Vulnerability to Network Outages

I believe that in spite of these seemingly large obstacles, we will see a huge increase in the number of cloud services, and in the number of people using them, in the next five years. When we keep data on our local hard drives, the security risks are huge. We are already pretty much dysfunctional when the network goes down, and I have had plenty of occasions where my system administrator had to reinstall a server or I had to reinstall my desktop applications. After all, we all trust the phone company to give us a dial tone.

The savings that can be attained are huge: a cloud computing provider can realize large savings by using specialized resources that are amortized across millions of users.

There is little doubt in my mind that cloud computing will become ubiquitous. The jury is still out as to what companies will become the service providers. However, I don’t think Microsoft will be one of them, because their culture just doesn’t allow for solid commitments to the end user.

—————————————-

[1] The Beauty in Redundancy, http://gadgetwise.blogs.nytimes.com/2009/10/12/the-beauty-in-redundancy/?scp=2&sq=sidekick&st=cse

[2] Microsoft Project Pink – The reason for sidekick data loss, http://dkgadget.com/microsoft-project-pink-the-reason-for-sidekick-data-loss/

[3] Willie Sutton, http://en.wikipedia.org/wiki/Willie_Sutton

[4] Online Banking Fraud Soars in Britain, http://www.businessweek.com/globalbiz/content/oct2009/gb2009108_505426.htm?campaign_id=rss_eu

[5] RSA Online Fraud Report, September 2009, http://www.rsa.com/solutions/consumer_authentication/intelreport/10428_Online_Fraud_report_0909.pdf

The list of the 20 largest publishers in the world shows a profoundly changing landscape in book publishing. The chart below is provided by Rüdiger Wischenbart from Publishing Perspectives in Germany. He has contributed some good insights into the transformation of the publishing industry. I offer my analysis on the state of the industry and its future.

Some publishers are faring much better economically, while others are steadily sliding downward in revenue and in their global standing. The changing dynamics among the professional information, education, and trade sectors have affected this year’s ranking. The good news is that publishers that have reinvented themselves (responded to market demand by listening to the customer) have done much better than most.

Pearson, Thomson Reuters, and Cengage are identified as star performers on the list. Four out of five dollars is generated through the digital integrated value chain. The digital content and e-book industry for professional information content is the high-growth segment of the publishing industry. As an industry, we are weak in our recognition of the current size and opportunity of the digital marketplace. Education publishers and trade publishers are having trouble evolving. There is a broad need for knowledgeable, skilled digital workers, experienced strategic thinkers, scalable and flexible technology infrastructure, and streamlined workflows/processes that allow publishers to execute on updated strategic initiatives.

Asian publishers are becoming a force, as they are in many other market segments. They include companies like Korea’s Kyowon and China’s Higher Education Press. Their strong suit is “localizing” content (i.e. cultural adaptation), and the power and economics of a huge growing audience. They are hungry. They want their piece of the pie.

Trade publishers, experiencing a steady decline in revenues, are poorly positioned to compete. However, Penguin and Hachette, with their strong performance, are current exceptions in this segment. It remains to be seen whether trade publishers can transform themselves into a sustainable business model. Trade’s poor performance and outlook have several causes, beginning with the fact that trade publishers have the farthest to go to find and serve today’s and tomorrow’s readers.

We have seen endless debate in trade on digital pricing and searches for new business models. The best solutions will leverage, and be respectful of, the stakeholders…all of them! That includes, but is not limited to: authors, agents, publishers, libraries, distributors, wholesalers, physical bookstores, digital bookstores, printers, service providers, the media, reviewers, technology companies, etc. If publishers bury their heads in the sand by refusing to experiment with new content, pricing models, and sales channels, then there will be serious trouble.

On the bright side, if publishers aggressively discuss new ways to sell content with their channel partners, and seek out non-traditional channel partners whose audiences have demand for their products, there is the potential not just to maintain current revenue but to actually grow the size of the pie. I know that is a radical statement to make, yet the ‘book’ is being redefined, and publishing is becoming something new.

Several key findings:

- The majority of Top Ranked Global Publishers are based in Europe.

- Professional/knowledge and STM publishers have course-corrected and are doing well.

- The first major Asian Publishers are positioning towards competing as top global players.

- The education sector is unstable.

- Trade Publishers are, and will be, hit the hardest in the rapidly emerging digital marketplace.

- Publishers that have reinvented themselves…are prospering!

I have high hopes for the publishing industry. However, we first need to meet Peter Drucker’s market-centric definition: “…the aim of marketing is to make selling superfluous. The aim of marketing is to know and understand the customer so well that the product or service fits him and sells itself.” Are we there yet? When we achieve this value statement, the industry will once again be healthy. As for me…being part of the solution? I am passionate about helping our clients build a stronger publishing industry that is focused on improving the reading experience.

What are you thinking now?

*The “Global Ranking of the Publishing Industry” is an annual initiative of Livres Hebdo, Paris, researched by Ruediger Wischenbart Content and Consulting, and co-published with buchreport (Germany), The Bookseller (UK) and Publishers Weekly (US).

Follow Ted Treanor on Twitter: twitter.com/ePubDr

Providing education on the business value of global information through our research is an important part of our content globalization practice. As we know however, the value of research is only as good as the results organizations achieve when they apply it! What really gets us jazzed is when knowledge sharing validates our thinking about what we call “universal truths” – the factors that define success for those who champion, implement and sustain organizational investment in multilingual communications.

Participants in our 2009 study on Multilingual Product Content: Transforming Traditional Practices into Global Content Value Chains told us that eliminating the language afterthought syndrome in their companies (a pattern of treating language requirements as secondary considerations within content strategies and solutions) would be a “defining moment” in realizing the impact of their efforts. Of course, we wanted more specifics. What would those defining moments look like? What would be the themes that characterized them? What would make up the “universal truths” about the remedies? Aggregating the answers to these questions led us to identify some key and common ingredients for success:

- Promotion of “global thinking” within their own departments, across product content domains, and between headquartered and regional resources.

- Strategies that balance inward-facing operational efficiency and cost reduction goals with outward-facing customer impacts.

- Business cases and objectives carefully aligned with corporate objectives, creating more value in product content deliverables and more influence for product content teams.

- Commitment to quality at the source, language requirements as part of status-quo information design, and global customer experience as the “end goal.”

- Focused and steady progress on removing collaboration barriers within their own departments and across product content domains, effectively creating a product content ecosystem that will grow over time.

- Technology implementations that enable standardization, automation, and interoperability.

Defining the ingredients naturally turned into sharing the recipes, a.k.a. a series of best practices profiles based on the experiences of individual technical documentation, training, localization/translation, or customer support professionals. Sincere appreciation goes to companies including Adobe, BMW Motorrad, Cisco, Hewlett Packard, Mercury Marine, Microsoft, and the New York City Department of Education, for enabling their product content champions to share their stories. Applause goes to the champions themselves, who continue to achieve ongoing and impressive results.

Want the details?

Download the Multilingual Product Content report (updated with additional profiles!)

Attending Localization World, Silicon Valley? Don’t miss Mary’s presentation on “Overcoming the Language Afterthought Syndrome” in the Global Business Best Practices track.